Common measures of reliability include internal consistency, test-retest, and inter-rater reliabilities. Reliability refers to the degree to which an instrument yields consistent results. Often times, when developing, modifying, and interpreting the validity of a given instrument, rather than view or test each type of validity individually, researchers and evaluators test for evidence of several different forms of validity, collectively (e.g., see Samuel Messick’s work regarding validity). A common measurement of this type of validity is the correlation coefficient between two measures.

Subject matter expert review is often a good first step in instrument development to assess content validity, in relation to the area or field you are studying. Content validity indicates the extent to which items adequately measure or represent the content of the property or trait that the researcher wishes to measure.Three common types of validity for researchers and evaluators to consider are content, construct, and criterion validities. Validity refers to the degree to which an instrument accurately measures what it intends to measure. Attention to these considerations helps to insure the quality of your measurement and of the data collected for your study.

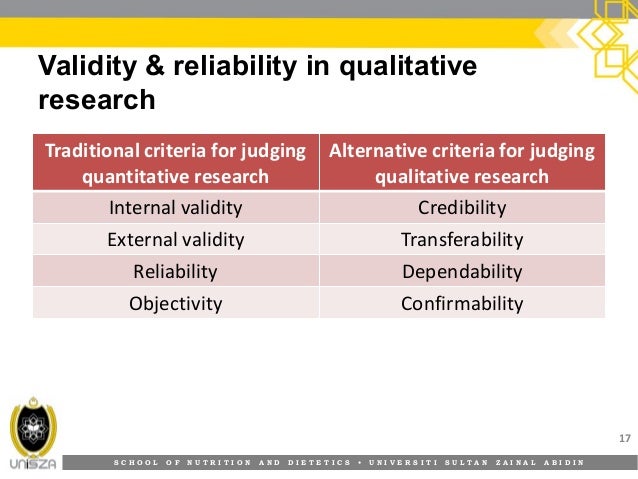

Validity and reliability are two important factors to consider when developing and testing any instrument (e.g., content assessment test, questionnaire) for use in a study. How to Determine the Validity and Reliability of an Instrument
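The correlation and reliability measures named above can be made concrete in a few lines. The following is a minimal standard-library Python sketch, assuming complete numeric data with no missing responses. `pearson_r` computes the correlation coefficient between two measures (as used for criterion validity or for test-retest reliability), `cronbach_alpha` is one widely used index of internal consistency, and `cohen_kappa` is one widely used index of agreement between two raters assigning categories. The function names and sample data are hypothetical, and Cronbach's alpha and Cohen's kappa are illustrative choices rather than the only statistics available for these reliability types.

```python
from collections import Counter
from math import sqrt
from statistics import mean, variance


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores.

    Usable for criterion validity (instrument vs. criterion measure) or
    test-retest reliability (same respondents, two administrations).
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)  # undefined if either list has zero spread


def cronbach_alpha(items):
    """Internal consistency; items[i][j] is respondent j's score on item i."""
    k = len(items)
    if k < 2:
        raise ValueError("need at least 2 items")
    totals = [sum(scores) for scores in zip(*items)]  # each respondent's total
    item_var = sum(variance(scores) for scores in items)  # sample variances
    return k / (k - 1) * (1 - item_var / variance(totals))


def cohen_kappa(rater_a, rater_b):
    """Chance-corrected agreement between two raters' category labels."""
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected by chance, from each rater's marginal proportions
    expected = sum(count_a[c] * count_b[c] for c in count_a) / n ** 2
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    # Hypothetical data for illustration only
    time1 = [10, 12, 15, 18, 20]  # scores at first administration
    time2 = [11, 13, 14, 19, 21]  # same respondents, second administration
    items = [[3, 4, 2, 5], [3, 5, 2, 4], [2, 4, 3, 5]]  # 3 items x 4 respondents
    rater_a = ["yes", "yes", "no", "no", "yes", "no"]
    rater_b = ["yes", "no", "no", "no", "yes", "yes"]
    print(f"test-retest r:    {pearson_r(time1, time2):.3f}")
    print(f"Cronbach's alpha: {cronbach_alpha(items):.3f}")
    print(f"Cohen's kappa:    {cohen_kappa(rater_a, rater_b):.3f}")
```

`cronbach_alpha` uses sample variances throughout; because alpha depends on a ratio of the summed item variances to the total-score variance, using population variances for both terms would give the same result.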
